Runtime Stealthy Perception Attacks against DNN-based Adaptive Cruise Control Systems

Authors: Xugui Zhou, Anqi Chen, Maxfield Kouzel, Morgan McCarty, Cristina Nita-Rotaru, Homa Alemzadeh

Presentation by: Obiora Odugu

Time of Presentation: April 22, 2025

Blog post by: Ruslan Akbarzade

Link to Paper: Read the Paper

Summary of the Paper

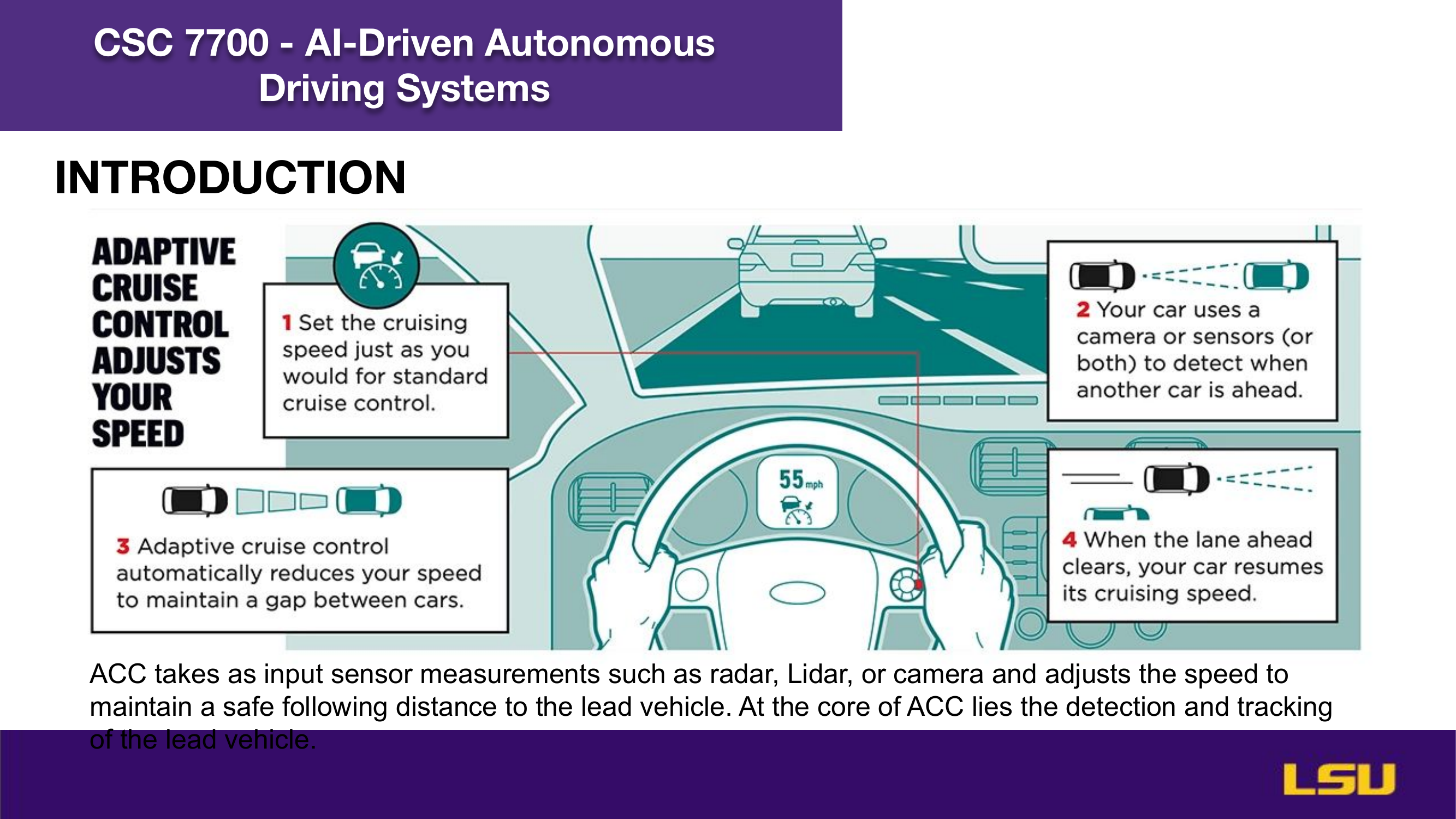

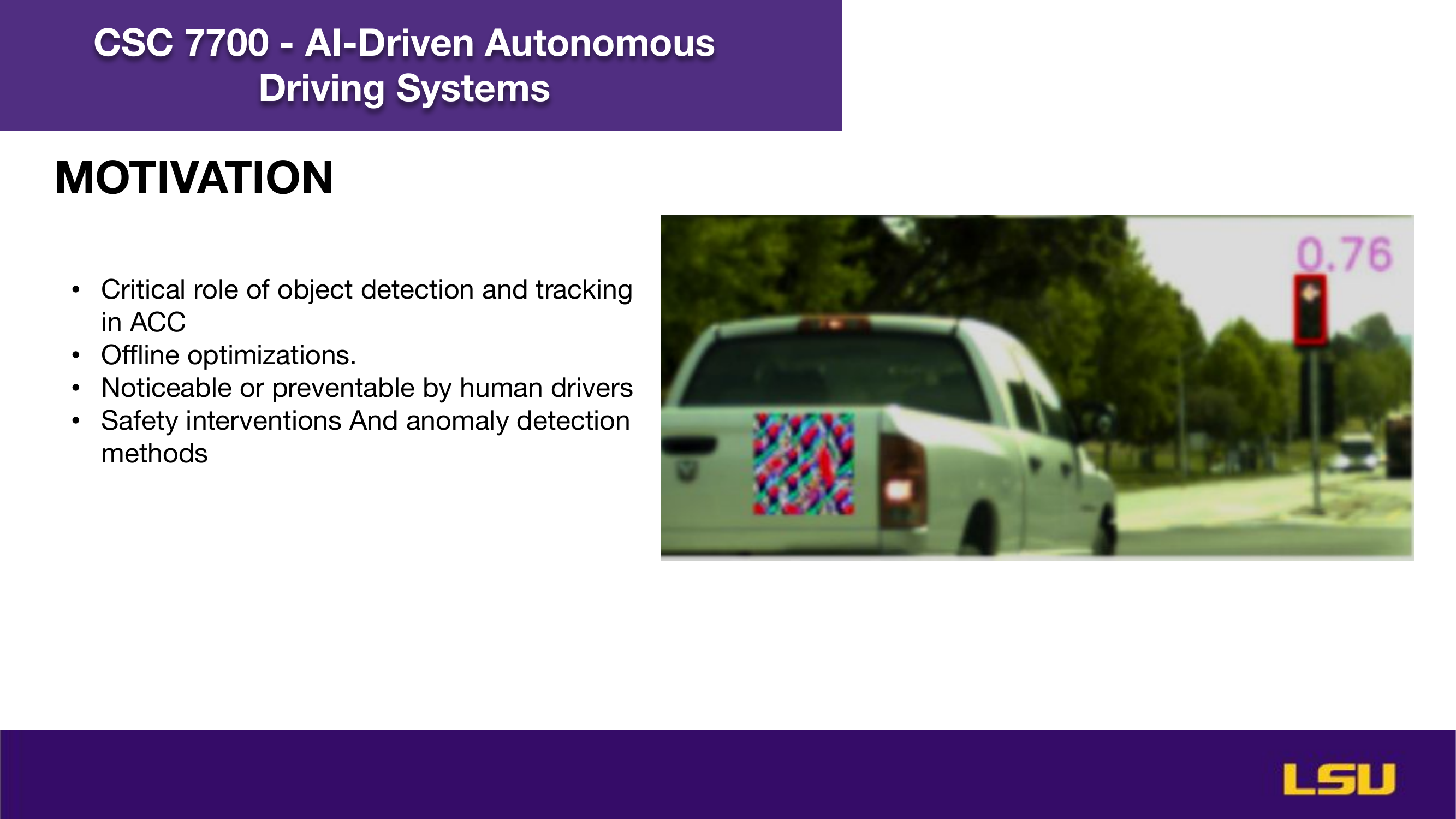

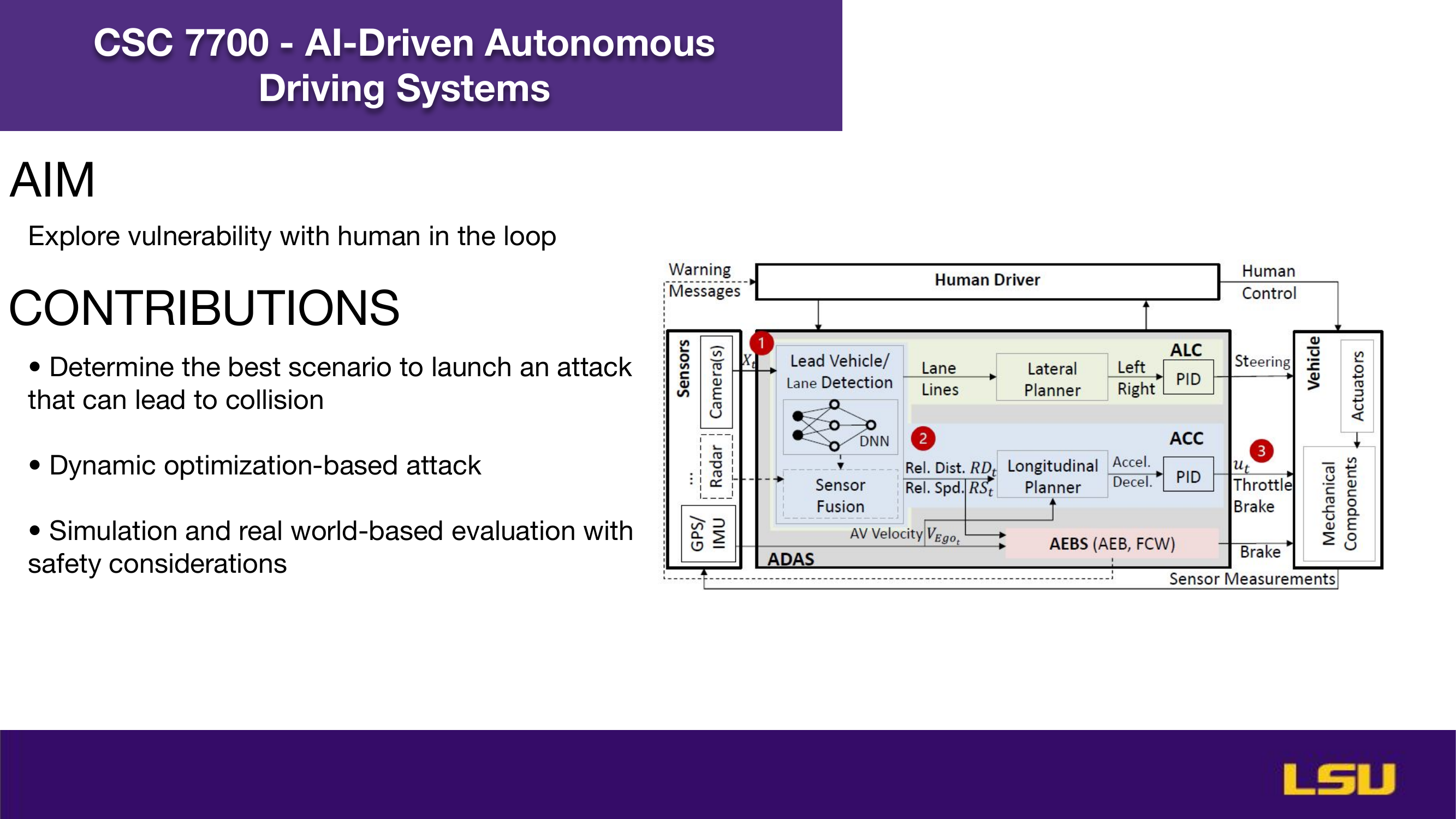

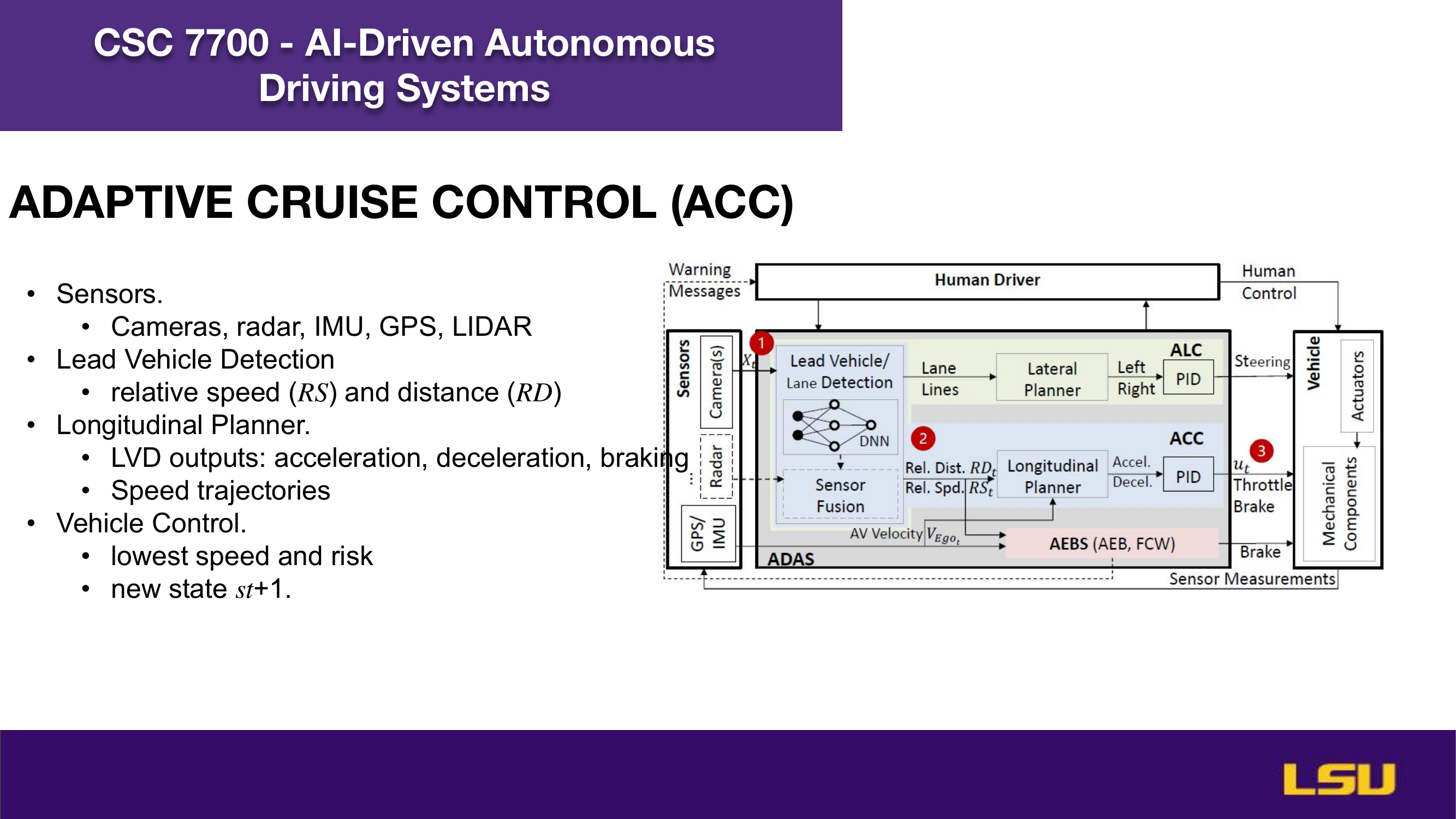

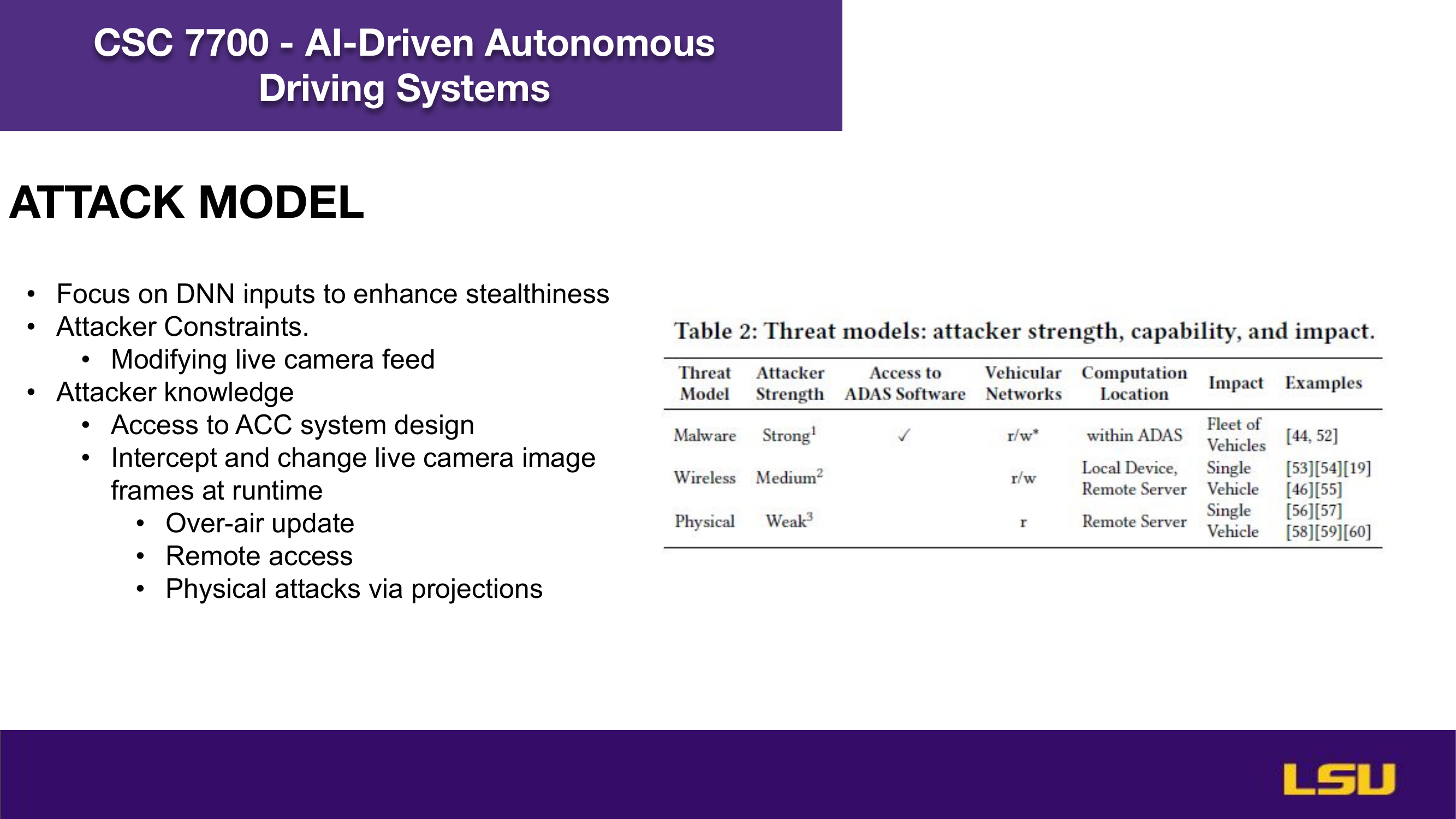

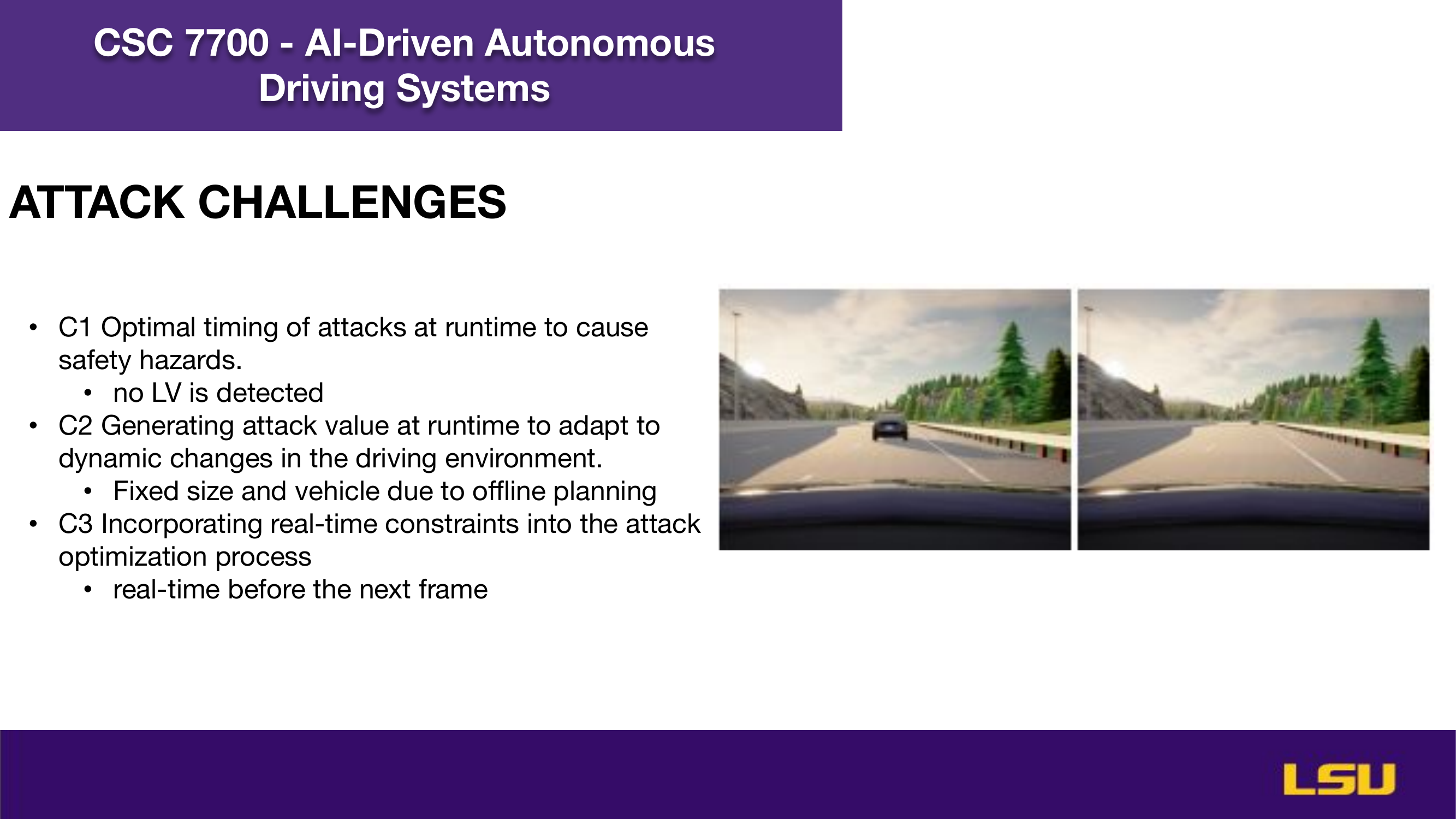

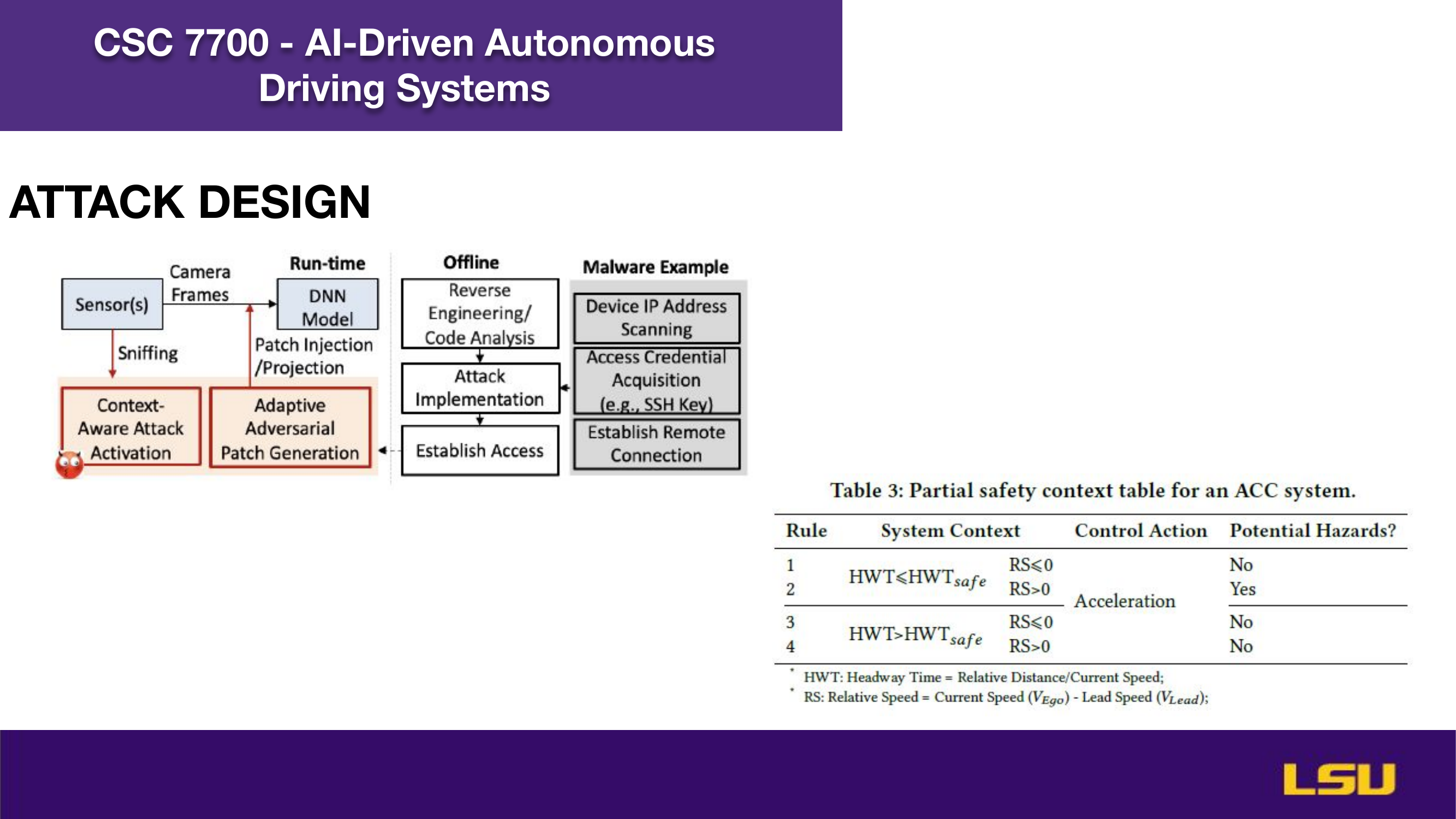

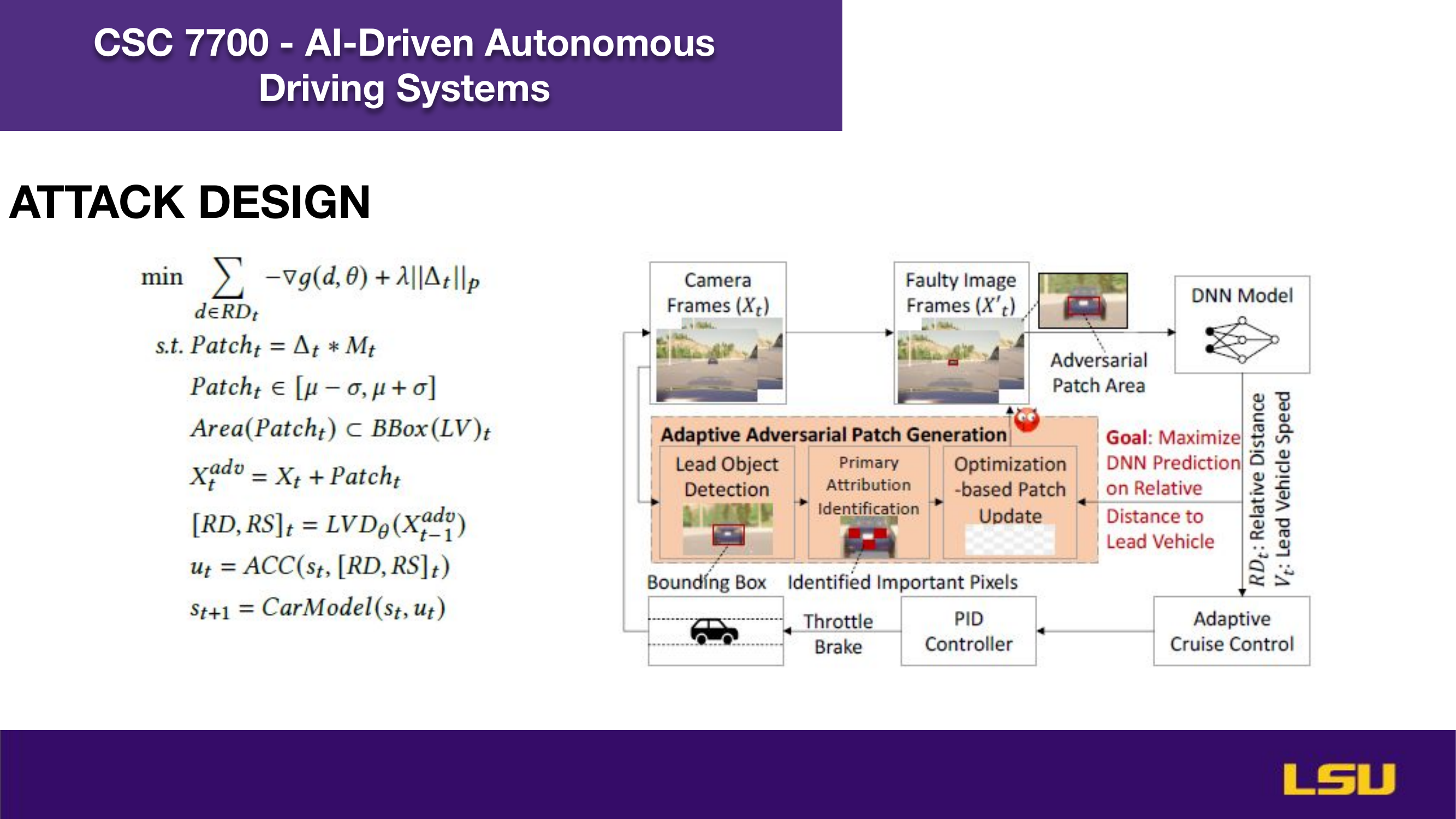

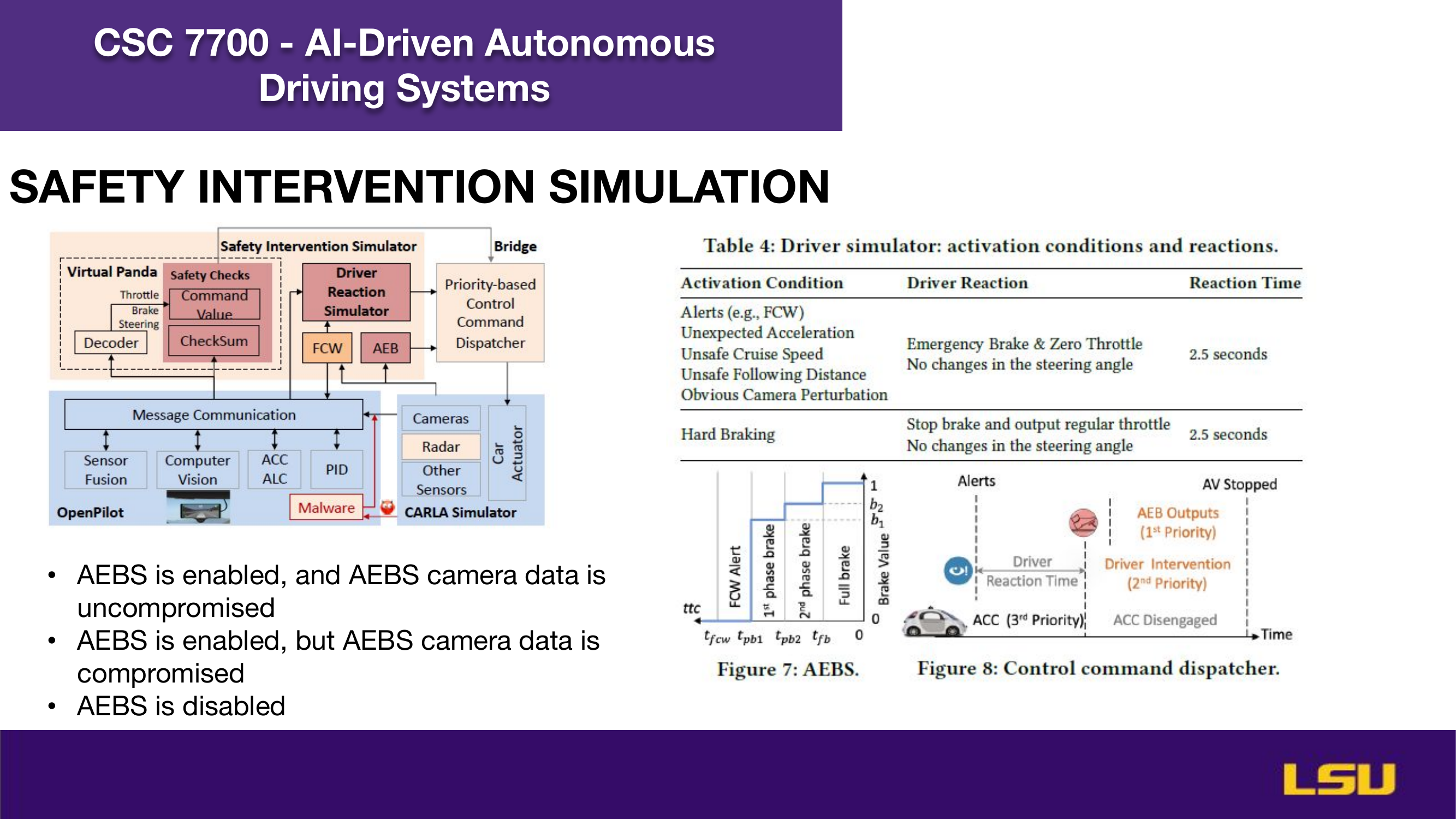

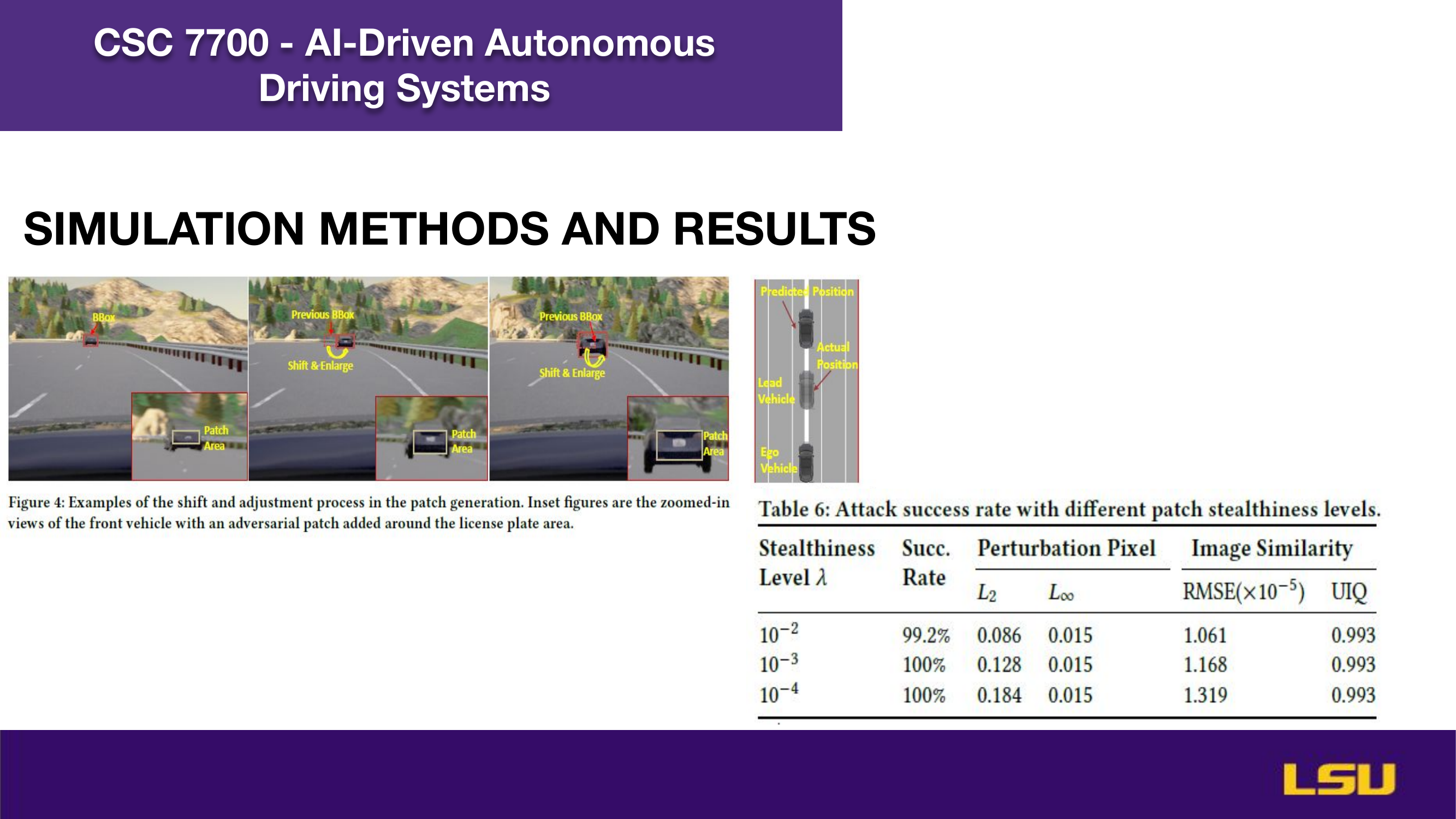

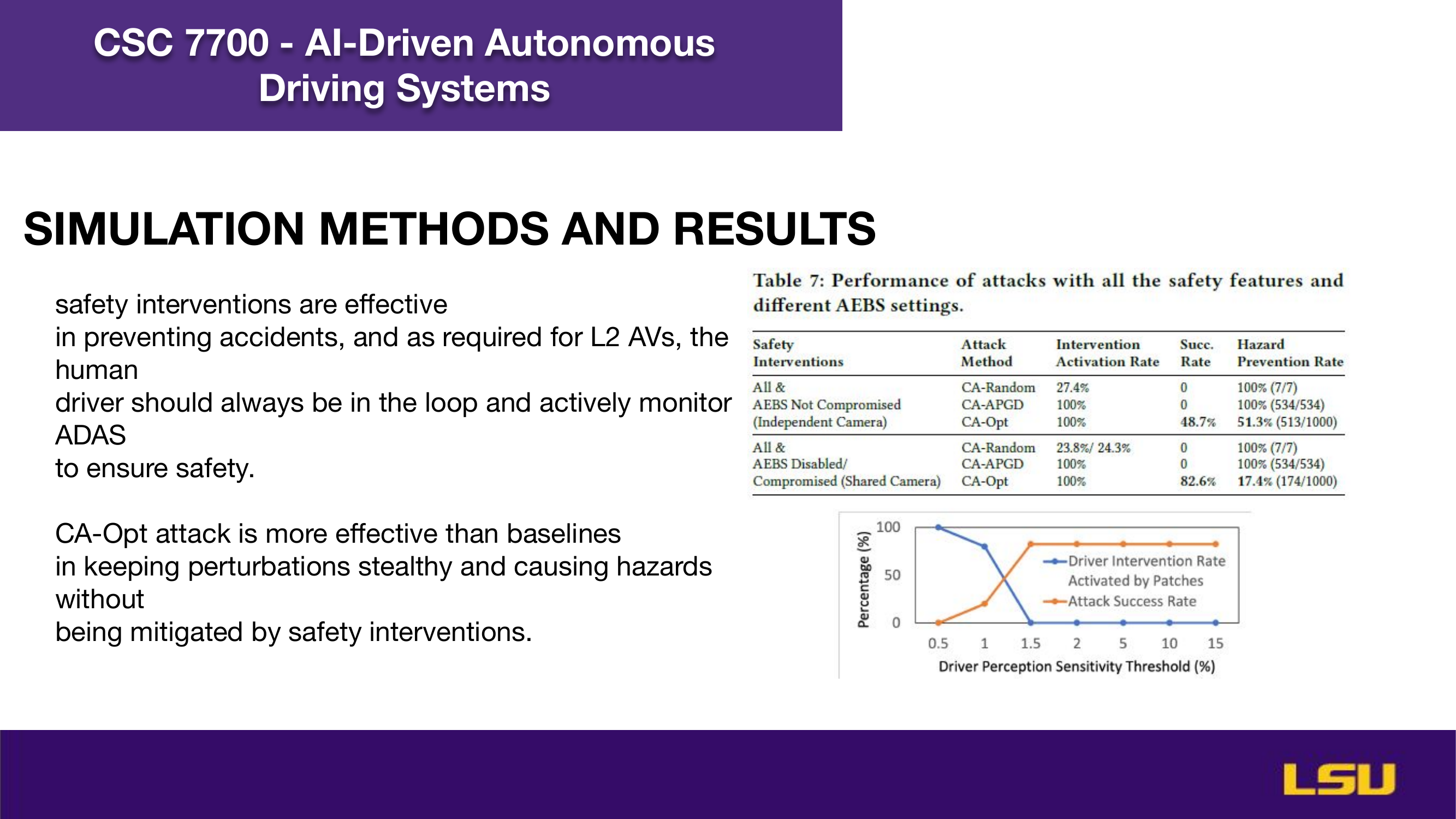

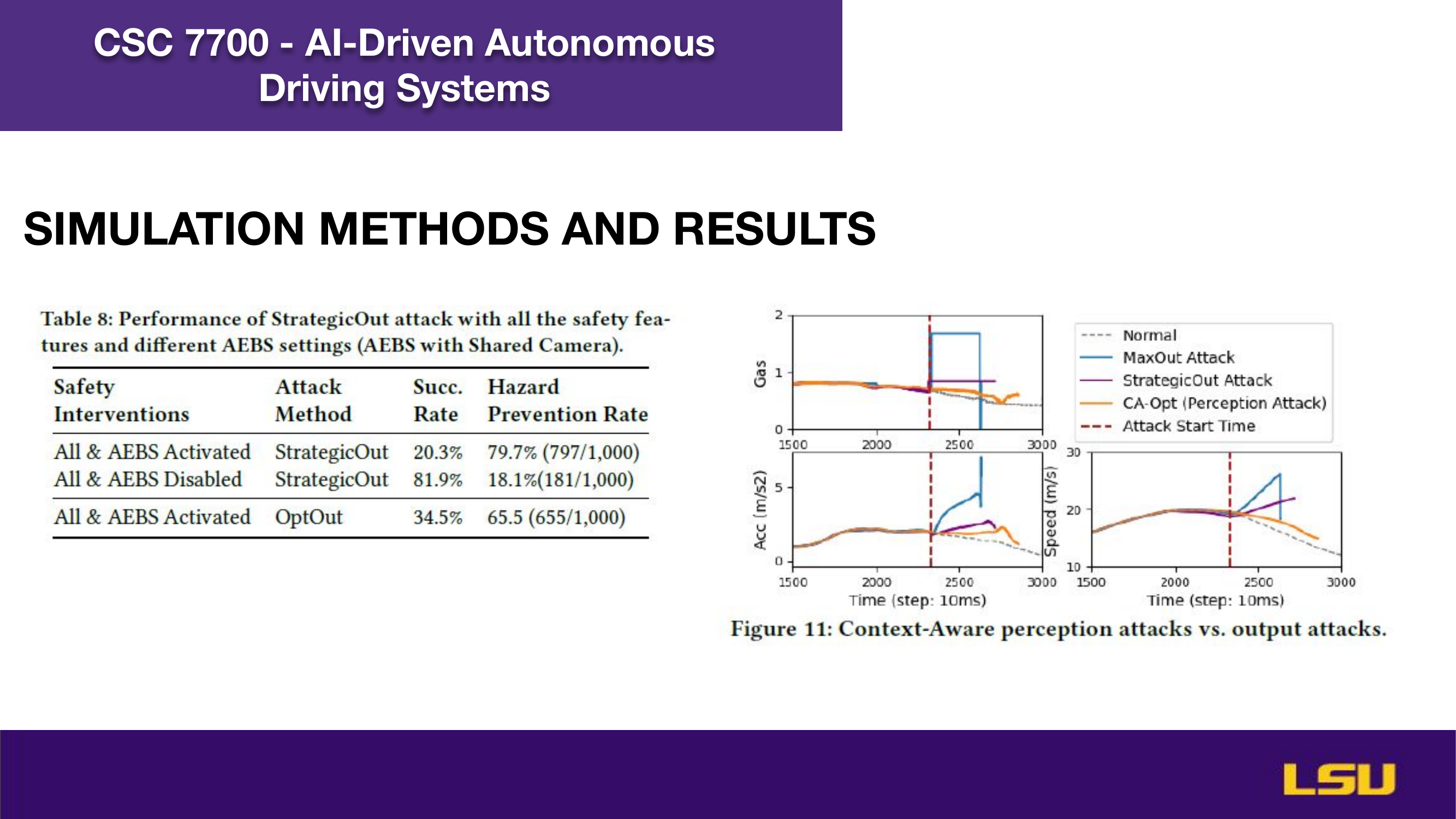

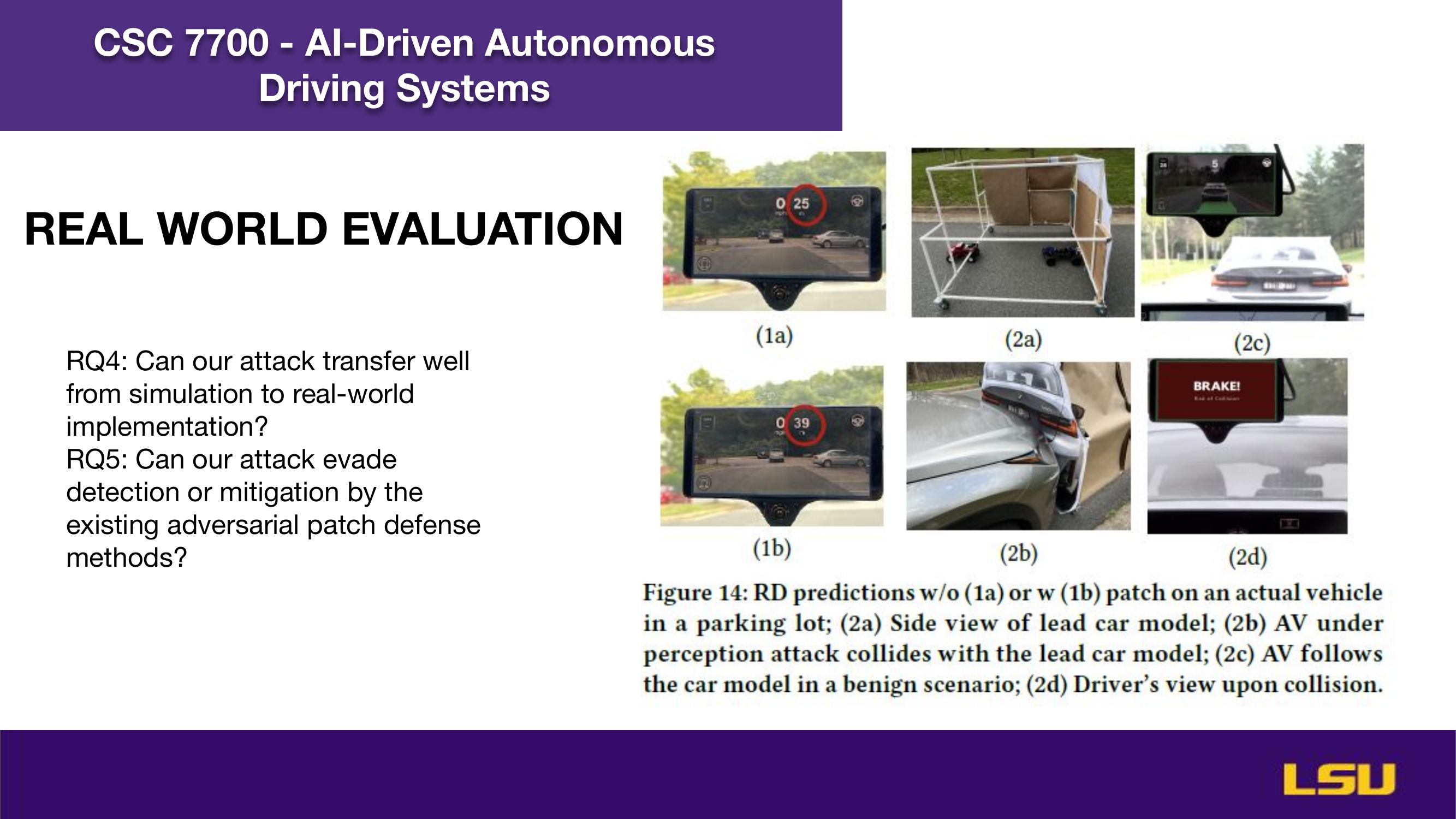

The paper "Runtime Stealthy Perception Attacks against DNN-based Adaptive Cruise Control Systems" investigates how adversarial attacks on deep neural networks (DNNs) can compromise the safety of Adaptive Cruise Control (ACC) in autonomous vehicles. The authors propose a dynamic, stealthy attack model that modifies live camera feeds to mislead perception modules without triggering safety interventions such as emergency braking. Unlike offline attacks, this method adapts in real time to changing driving conditions and optimizes the timing and magnitude of its perturbations for maximum impact. Their CA-Opt attack outperforms the baseline methods (CA-Random, CA-APGD), causing more collision risks while remaining undetected. The study includes both simulation and real-world evaluations, which confirm the attack's transferability and its ability to bypass defense mechanisms. Ultimately, the work highlights the importance of robust perception security, especially in high-risk environments like construction zones.
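To make the idea of timing and bounding a perturbation more concrete, here is a toy sketch in Python. It is not the authors' CA-Opt method: it abstracts away the camera images and the DNN entirely and models only the attack's effect on the perceived distance to the lead vehicle. The car-following law `acc_accel`, its gains, the scenario numbers, and the ramp-and-hold offset are all hypothetical choices made for illustration. The simulated attacker stays idle until the lead car starts braking (the safety-critical context) and then grows the distance error by a small fixed amount per step, so the perceived gap never jumps abruptly.

```python
from dataclasses import dataclass

DT = 0.1  # simulation time step in seconds (assumed)


@dataclass
class State:
    gap: float     # true distance to the lead vehicle (m)
    v_ego: float   # ego vehicle speed (m/s)
    v_lead: float  # lead vehicle speed (m/s)


def acc_accel(perceived_gap, v_ego, v_lead, headway=1.5, standstill=5.0,
              k_gap=0.2, k_speed=0.6, a_min=-3.0, a_max=2.0):
    """Hypothetical constant-time-headway ACC law (not OpenPilot's controller)."""
    desired_gap = standstill + headway * v_ego
    accel = k_gap * (perceived_gap - desired_gap) + k_speed * (v_lead - v_ego)
    return max(a_min, min(a_max, accel))


def simulate(attack, steps=300, lead_brake=-2.0, brake_start=50,
             offset_step=0.2, offset_max=15.0):
    """Return the smallest true gap (m) seen during the run; <= 0 means a collision."""
    s = State(gap=40.0, v_ego=25.0, v_lead=25.0)
    offset = 0.0  # error the toy attacker adds to the perceived distance (m)
    min_gap = s.gap
    for t in range(steps):
        lead_braking = t >= brake_start and s.v_lead > 0.0
        a_lead = lead_brake if lead_braking else 0.0
        # Context-aware timing: only grow the error while the lead car is braking,
        # and by at most offset_step per step, so the perceived gap changes smoothly.
        if attack and lead_braking:
            offset = min(offset + offset_step, offset_max)
        perceived_gap = s.gap + offset  # the injected error is held once added
        a_ego = acc_accel(perceived_gap, s.v_ego, s.v_lead)
        # Integrate speeds (no reversing), then update the true gap.
        s.v_lead = max(0.0, s.v_lead + a_lead * DT)
        s.v_ego = max(0.0, s.v_ego + a_ego * DT)
        s.gap += (s.v_lead - s.v_ego) * DT
        min_gap = min(min_gap, s.gap)
    return min_gap


print(f"clean run, min gap:    {simulate(attack=False):.1f} m")
print(f"attacked run, min gap: {simulate(attack=True):.1f} m")
```

In this toy model the clean run keeps a positive gap, while the attacked run lets the ego car close into the lead vehicle (a negative gap), even though the injected error grows by at most `offset_step` per step. The real attack instead perturbs camera frames and chooses timing and magnitude through optimization.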

Presentation Recaps

Discussion and Class Insights

Q1: How might real-world factors in construction zones increase the success rate or stealthiness of an attack on autonomous vehicles?

George: George mentioned that fully autonomous systems may struggle significantly in construction zones due to unpredictable lane changes and unfamiliar objects. He emphasized that the environment is too complex, with too many possibilities for the model to handle reliably.

Bassel & Alex: They added that construction sites are already tricky even for human drivers, and suggested highlighting this complexity when discussing the vulnerability of autonomous systems.

Dr. Zhou: The professor commented that the angle of the discussion was well chosen. He pointed out that people may ignore signs or fail to follow instructions in such zones, so an autonomous system could actually improve safety. He reminded the class that Level 2 autonomous vehicles still require human supervision and cited the importance of a final driver check before the last 10 minutes of operation.

Q2: What elements would you need to include in a simulation or field test to capture risks in construction zones (e.g., driver reaction delay, AEBS response)?

Ruslan & Sujan: They raised the point that future simulations must include factors such as driver reaction time and the behavior of the AEBS (Automatic Emergency Braking System). These components are essential for testing how autonomous vehicles (AVs) handle last-minute decisions in unpredictable construction zones.
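Both factors can be captured even in a very small simulation. The sketch below is a hypothetical one-dimensional scenario, a simplified illustration rather than the paper's simulation setup: perception fails to report a stopped obstacle (for example, a barrier in a construction zone), so ACC does not brake; the driver notices the obstacle at a fixed distance and starts braking only after a reaction delay; and an AEBS, assumed here to rely on an independent, unattacked sensor, triggers hard braking once the time-to-collision (TTC) drops below a threshold and stays engaged until standstill. The function name `closest_approach` and all distances, decelerations, and thresholds are illustrative assumptions.

```python
def closest_approach(reaction_delay_s, aebs_enabled, v0=20.0, start_dist=80.0,
                     notice_dist=50.0, driver_decel=6.0, aebs_ttc_s=1.5,
                     aebs_decel=8.0, dt=0.01):
    """Return the remaining distance (m) to a stopped obstacle when the run ends.

    A value <= 0 means the ego vehicle hit the obstacle. All parameters are
    hypothetical values chosen for illustration.
    """
    dist, v, t = start_dist, v0, 0.0
    noticed_at = None    # time at which the driver first sees the obstacle
    aebs_active = False  # once triggered, the AEBS brakes until standstill
    while v > 0.0 and dist > 0.0:
        if noticed_at is None and dist <= notice_dist:
            noticed_at = t
        # The AEBS uses the true distance (assumed independent, unattacked sensor).
        if aebs_enabled and dist / v < aebs_ttc_s:
            aebs_active = True
        decel = 0.0
        if noticed_at is not None and t - noticed_at >= reaction_delay_s:
            decel = driver_decel  # the driver brakes only after the reaction delay
        if aebs_active:
            decel = max(decel, aebs_decel)
        v = max(0.0, v - decel * dt)
        dist -= v * dt
        t += dt
    return dist


for delay in (0.5, 1.0, 1.5):
    for aebs in (False, True):
        gap = closest_approach(delay, aebs)
        outcome = "collision" if gap <= 0 else f"stopped {gap:.1f} m short"
        print(f"reaction {delay:.1f} s, AEBS {'on ' if aebs else 'off'}: {outcome}")
```

With these assumed numbers, a 0.5 s reaction delay is enough for the driver alone, a 1.5 s delay ends in a collision without AEBS, and enabling the AEBS turns that same case into a stop a few meters short. The exact crossover points depend entirely on the chosen parameters, which is why a realistic study would sweep them.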